Whole-brain, bottom-up neuroscience: The time for it is now

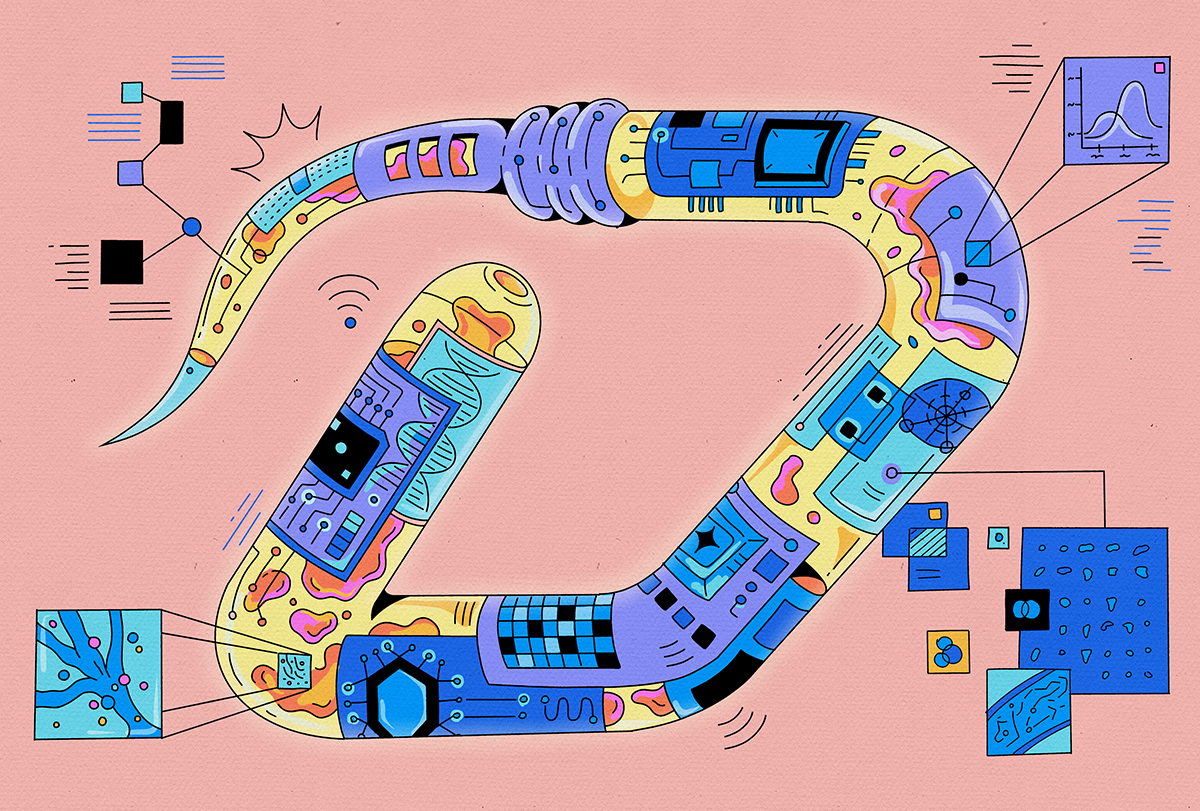

Here we outline an approach that we think will enable dynamic observation and control of entire brains, as well as mapping them at the level of molecules, wires and neurons, and we describe how such datasets could yield bottom-up models. To make the argument concrete, and to provide a practical test bed for a first actual attempt, we focus on the worm Caenorhabditis elegans. Cracking the worm would help us see how molecular recipes generate neural physiology. And it would enable us to understand how neurons work together to yield emergent brain functions. We also outline how these approaches might scale to larger brains, including those of the larval zebrafish, the mouse and even the human, in the years to come.

With its 302 neurons, C. elegans feeds, mates, flees and makes complex decisions.

An electron-microscopy-derived connectome of the worm has been available for decades. More recently, researchers have developed gene-expression profiles of all the cells of C. elegans and recorded and stimulated, with single-cell precision, activity throughout the worm's nervous system. These datasets, though impressive, have limitations. They are too small to support whole-worm models that explain the behavior of the worm's nervous system in terms of neuron-neuron interactions, and they were collected from different worms, which makes it difficult to integrate molecular, wiring and activity data.

Collecting the data needed to create a whole-worm model would require running multiple kinds of experiments on the same animal and acquiring data from many animals (Box 1). New tools are making this possible. Both brain mapping (mapping all neurons, their synapses and associated molecules) and control technologies (stimulating and recording all neurons) are already operating at the scale of the entire nervous system of C. elegans.

To decipher molecular recipes, researchers could, in principle, apply expansion microscopy pipelines, combined with machine-learning-facilitated connectomic tracing, protein-shape imaging, multiplexed antibody staining or subcellular spatial transcriptomics, to a complete C. elegans. How do we learn what each molecule actually does so that we can convert images of C. elegans into a simulation? One possibility is to estimate how dynamics within, and interactions between, the mapped molecules give rise to function, using AlphaFold-style or other kinds of computational modeling.

Another strategy would be to study molecular interactions and dynamics through optogenetic protein perturbation and multiplexed live imaging, either in native cells or after reconstitution in heterologous systems. Of course, there might be many molecules we don’t know about yet: Machine learning could help us infer some of these, based on the ones that we do see and perturb. Such datasets could yield bottom-up biophysical models of how the molecules of a neuron give rise to its emergent physiology, via cable equations based on molecular location and kinetics, for example.
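To illustrate what "cable equations based on molecular location and kinetics" could mean in practice, here is a minimal sketch, not a model of any real worm neuron. It assumes a single passive channel type whose density per compartment stands in for an imaged molecular map, a two-state (closed/open) kinetic scheme that sets the channel's open probability, and a thin unbranched neurite split into compartments. All parameter values (`C_M`, `R_I`, `GAMMA`, `E_LEAK`, radius, rates) and function names are hypothetical and chosen only to be in a plausible range. The sketch solves the discretized cable equation at steady state, which is enough to show how an emergent property such as input resistance falls out of where the molecules sit.

```python
import numpy as np

# Hypothetical parameters (illustrative only, not measured C. elegans values).
# Units are chosen so that nS * mV = pA and pF * mV/ms = pA.
C_M = 0.01      # specific membrane capacitance, pF/um^2 (= 1 uF/cm^2); not needed at steady state
R_I = 1e6       # axial resistivity, ohm*um (= 100 ohm*cm)
GAMMA = 0.01    # single-channel conductance, nS (= 10 pS)
E_LEAK = -60.0  # reversal potential of the mapped channel, mV


def open_probability(k_open, k_close):
    """Steady-state open probability of a two-state (closed <-> open) channel."""
    return k_open / (k_open + k_close)


def steady_state_voltage(density, radius, dx, k_open, k_close, i_inj):
    """Solve the discretized passive cable equation at steady state.

    density: channels per um^2 in each compartment (stand-in for an imaged map)
    radius, dx: neurite radius and compartment length, um
    i_inj: injected current per compartment, pA
    Returns the membrane voltage of each compartment, mV.
    """
    n = len(density)
    area = 2 * np.pi * radius * dx  # membrane area per compartment, um^2
    # Membrane conductance = channel count * unitary conductance * P(open), nS
    g_mem = density * area * GAMMA * open_probability(k_open, k_close)
    # Axial conductance between neighbouring compartments, converted S -> nS
    g_ax = np.pi * radius**2 / (R_I * dx) * 1e9

    # Conductance matrix: membrane conductance on the diagonal plus axial
    # coupling to neighbours; sealed ends, so no current leaves the tips.
    G = np.diag(g_mem)
    for i in range(n - 1):
        G[i, i] += g_ax
        G[i + 1, i + 1] += g_ax
        G[i, i + 1] -= g_ax
        G[i + 1, i] -= g_ax

    # Steady state: G @ (V - E_LEAK) = i_inj   (nS * mV = pA)
    return E_LEAK + np.linalg.solve(G, i_inj)


if __name__ == "__main__":
    n = 50
    density = np.full(n, 0.2)  # hypothetical uniform channel map
    density[25:] = 2.0         # hypothetical: 10x more channels in the distal half
    i_inj = np.zeros(n)
    i_inj[0] = 5.0             # inject 5 pA into the proximal tip
    v = steady_state_voltage(density, 0.25, 10.0, 1.0, 1.0, i_inj)
    r_in = (v[0] - E_LEAK) / i_inj[0]  # mV / pA = GOhm
    print(f"input resistance ~ {r_in:.2f} GOhm")
```

Moving channels around in `density`, or changing the kinetic rates, changes the input resistance and the voltage attenuation along the neurite without touching any other parameter. A realistic version would need many channel types, voltage-dependent kinetics and time stepping rather than a single linear solve.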

To understand how neurons give rise to brain dynamics, we can treat individual cells as building blocks and model their interactions through synaptic and non-synaptic signaling. This could be done by extending the bottom-up models of the previous paragraph into networks of neurons. We could also probe causality by optogenetically perturbing the electrical activity of single neurons (or ensembles) while recording voltage or calcium signals in the rest of the network. When we perturb neuron A, what does it do to neuron B? If we could measure these pairwise effects across the whole network, we could simulate how the neurons work together.
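One subtlety in "perturb neuron A, watch neuron B" is that the measured response mixes direct coupling with indirect paths through other neurons. The sketch below shows one way to separate them, under strong simplifying assumptions: a noise-free linear rate network, τ dr/dt = −r + W r + u, used as a hypothetical stand-in for real neurons; every neuron can be stimulated one at a time; and steady-state rates are read out exactly. The 10-neuron wiring `W_true` is random and hypothetical, as are all function names. Under these assumptions the matrix of total effects is R = δ (I − W)⁻¹, so inverting it recovers the direct weights.

```python
import numpy as np


def simulate_steady_state(W, u, tau=10.0, dt=0.1, steps=5000):
    """Integrate tau * dr/dt = -r + W @ r + u with forward Euler; return final rates."""
    r = np.zeros(W.shape[0])
    for _ in range(steps):
        r = r + (dt / tau) * (-r + W @ r + u)
    return r


def perturbation_responses(W, baseline_input, delta=1.0):
    """Stimulate one neuron at a time; column j holds every neuron's change in rate."""
    n = W.shape[0]
    r0 = simulate_steady_state(W, baseline_input)
    R = np.empty((n, n))
    for j in range(n):
        u = baseline_input.copy()
        u[j] += delta  # extra (optogenetic-style) drive to neuron j only
        R[:, j] = simulate_steady_state(W, u) - r0
    return R


def infer_direct_weights(R, delta=1.0):
    """Linear model: R = delta * inv(I - W), so W = I - delta * inv(R).

    R[b, a] is the total effect of perturbing a on b, including indirect
    paths; inverting R strips those out, leaving the direct couplings.
    """
    return np.eye(R.shape[0]) - delta * np.linalg.inv(R)


# Hypothetical 10-neuron network: sparse random wiring, no self-connections.
rng = np.random.default_rng(0)
n = 10
mask = rng.random((n, n)) < 0.2
np.fill_diagonal(mask, False)
W_true = mask * rng.normal(size=(n, n))
W_true *= 0.5 / np.linalg.norm(W_true, 2)  # spectral norm 0.5 keeps dynamics stable

baseline = rng.uniform(0.0, 1.0, size=n)  # tonic input to every neuron
R = perturbation_responses(W_true, baseline)
W_hat = infer_direct_weights(R)
print("max error in recovered weights:", np.abs(W_hat - W_true).max())
```

Comparing `R` with `W_true` shows nonzero total effects between neurons that share no direct connection, which is exactly what the inversion removes. Real recordings are noisy and nonlinear, calcium is a slow proxy for voltage, and not every neuron may be stimulable, so an actual analysis would more likely fit a regularized or nonlinear model than invert a matrix.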
