first look • The Register

hands on Nvidia bills its long-anticipated DGX Spark as the “world’s smallest AI supercomputer,” and, at $3,000 to $4,000 (depending on config and OEM), you might be expecting the Arm-based mini-PC to outperform its less-expensive siblings.

But the machine is far from the fastest GPU in Nvidia’s lineup. It’s not going to beat out an RTX 5090 in large language model (LLM) inference, fine tuning, or even image generation — never mind gaming. What the DGX Spark, and the slew of GB10-based systems hitting the market tomorrow, can do is run models the 5090 or any other consumer graphics card on the market today simply can’t.

When it comes to local AI development, all the FLOPS and memory bandwidth in the world won’t do you much good if you don’t have enough VRAM to get the job done. Anyone who has tried machine learning workloads on consumer graphics will have run into CUDA out of memory errors on more than one occasion.

The Spark is equipped with 128 GB of memory, the most of any workstation GPU in Nvidia’s portfolio. Nvidia achieves this using LPDDR5x, which, while glacial compared to the GDDR7 used by Nvidia’s 50-series, means the little box of TOPS can run inference on models of up to 200 billion parameters or fine tune models of up to 70 billion parameters, both at 4-bit precision, of course.
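For a rough sense of where those limits come from, here is a back-of-the-envelope sketch of weight storage alone. The function name is ours, and it ignores quantization scales, activations, and the key-value cache, so treat the results as a floor rather than a measurement.

```python
def weight_footprint_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight storage in GB (10^9 bytes) for a model of the given size."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Model sizes quoted in the article, plus a 16-bit case for contrast.
for params_b, bits in [(200, 4), (70, 4), (70, 16)]:
    gb = weight_footprint_gb(params_b, bits)
    print(f"{params_b}B parameters at {bits}-bit: about {gb:.0f} GB of weights")
```

By this estimate, a 200 billion parameter model at 4-bit needs roughly 100 GB for weights, which fits in 128 GB with some room to spare. A 70 billion parameter model at 16-bit would need about 140 GB and would not fit.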

Normally, these kinds of workloads would require multiple high-end GPUs, costing tens of thousands of dollars. By trading a bit of performance and a load of bandwidth for sheer capacity, Nvidia has built a system that may not be the fastest at any one thing, but can run them all.

Nvidia isn’t the first to build a system like this. Apple and AMD already have machines with loads of LPDDR5x and wide memory buses that have made them incredibly popular among members of the r/LocalLLaMA subreddit.

However, Nvidia is leaning on the fact that the GB10 powering the system is based on the same Blackwell architecture as the rest of its current-gen GPUs. That means it can take advantage of nearly 20 years’ worth of software development built up around its CUDA runtime.

Sure, the ecosystem around Apple’s Metal and AMD’s ROCm software stacks has matured considerably over the past few years, but, when you’re spending $3K-$4K on an AI mini PC, it’s nice to know your existing code should work out of the box.

Note that the DGX Spark will be available both from Nvidia and in custom versions from OEM partners such as Dell, Lenovo, HP, Asus, and Acer. The Nvidia Founders Edition we reviewed has a list price of $3,999 and comes with 4 TB of storage and gold cladding. Versions from other vendors may have less storage and carry a lower price.

The spark that lit the fire

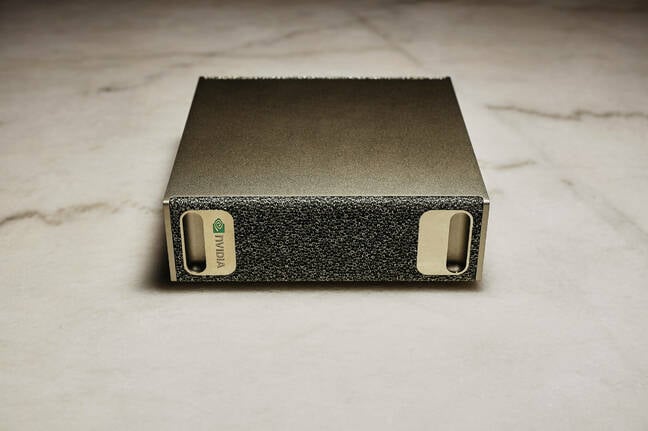

The machine itself measures just 150 x 150 x 50.5 mm and is styled like a miniaturized DGX-1. The resemblance is no accident.

In 2016, Nvidia CEO and leather jacket aficionado Jensen Huang personally delivered the first DGX-1 to Elon Musk at OpenAI. The system, as it turns out, was the Spark that lit the fire behind the generative AI boom. On Monday, Huang visited Musk once again, this time with a DGX Spark in hand.

The DGX Spark’s golden design is clearly inspired by the original DGX-1 system hand delivered by Jensen Huang to Elon Musk at OpenAI in 2016

As mini-PCs go, the Spark features a fairly standard flow-through design, which pulls cool air in the front through a metallic mesh panel and exhausts warm air out the rear.

For better or worse, this design choice means that all of the I/O is located on the back of the unit. There, we find four USB-C ports, one of which is dedicated to the machine’s 240W power brick, leaving the remaining three available for storage and peripherals.

Alongside USB, there’s a standard HDMI port for display out, a 10 GbE RJ45 network port, and a pair of QSFP cages which can be used to form a mini-cluster of Sparks connected at 200 Gbps.

Nvidia only officially supports two Sparks in a cluster, but we’re told there’s nothing stopping you from coloring outside the lines and building a miniature supercomputer if you are so inclined. We’ve certainly seen weirder machines built this way. Remember that Sony PlayStation supercluster the Air Force built back in 2010?

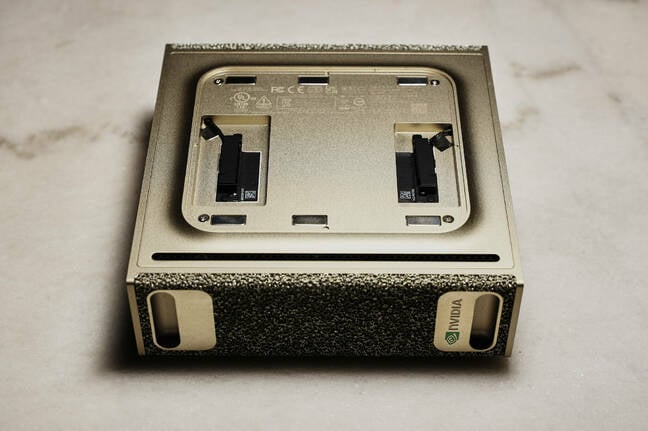

The bottom of the Spark features a magnetically attached cover, but all that’s hiding underneath are some wireless antennas

At the bottom of the system, we find a fairly plain plastic foot that is attached using magnets. Pulling it off doesn’t reveal much more than the wireless antennas. It seems that, if you want to swap out the 4 TB SSD for a higher capacity one, you’ll need to disassemble the whole thing.

Hopefully, partner systems from the likes of Dell, HPE, Asus, and others will make swapping out storage a bit easier.

The tiniest Superchip

At the Spark’s heart is Nvidia’s GB10 system on chip (SoC), which, as the name suggests, is essentially a shrunken down version of the Grace Blackwell Superchips found in the company’s multi-million dollar rack systems.

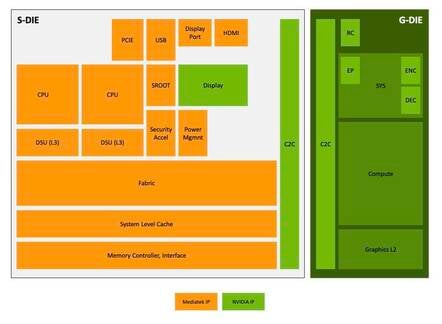

The chip features two dies: one for the CPU and one for the GPU — both built on TSMC’s 3nm process tech and bonded using the fab’s advanced packaging tech.

Unlike its bigger siblings, the GB10 doesn’t use Arm’s Neoverse cores. Instead, the chip was built in collaboration with MediaTek and features 20 Arm cores — 10 X925 performance cores and 10 Cortex A725 efficiency cores.

Here’s a breakdown of the IP making up the GB10. Everything in orange was developed by MediaTek while the green shows elements built by Nvidia

The GPU, meanwhile, is based on the same Blackwell architecture as we see in the rest of Nvidia’s 50-series lineup. The AI arms dealer claims that the graphics processor is capable of delivering a petaFLOP of FP4 compute. Which sounds great, until you consider that there aren’t all that many workloads that can take advantage of both sparsity and 4-bit floating point arithmetic.

In practice, this means that the most you’ll likely see from any GB10 system is 500 dense teraFLOPS at FP4.
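The relationship between the headline number and the usable ones can be sketched in a few lines. This assumes that sparsity accounts for a 2x uplift (consistent with the one petaFLOP and 500 teraFLOPS figures above) and that peak throughput halves each time the precision doubles, which matches the dense FP8 and BF16 figures quoted elsewhere in this article. The function name is ours.

```python
SPARSE_FP4_TFLOPS = 1000  # Nvidia's headline claim: one petaFLOP of sparse FP4


def dense_tflops(sparse_fp4_tflops: float, bits: int) -> float:
    """Estimate dense peak throughput at a given precision from the sparse FP4 figure."""
    dense_fp4 = sparse_fp4_tflops / 2  # assumption: sparsity doubles the headline rate
    return dense_fp4 * 4 / bits        # assumption: rate halves as precision doubles


for label, bits in [("FP4", 4), ("FP8", 8), ("BF16", 16)]:
    print(f"{label}: about {dense_tflops(SPARSE_FP4_TFLOPS, bits):.0f} dense teraFLOPS")
```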

Both the graphics processor and CPU are fed by a common pool of LPDDR5x, which, as we’ve already mentioned, totals 128 GB in capacity and delivers 273 GBps of bandwidth.

Speeds and feeds

| Specification | DGX Spark |
| --- | --- |
| Architecture | Grace Blackwell |
| GPU | Blackwell Architecture |
| CPU | 20-core Arm (10x X925 + 10x A725) |
| CUDA Cores | 6,144 |
| Tensor Cores | 192 5th-Gen |
| RT Cores | 48 4th-Gen |
| Tensor Perf | 1 petaFLOP sparse FP4 |
| System Mem | 128 GB LPDDR5x 8533 MT/s |
| Memory Bus | 256 bit |
| Memory BW | 273 GBps |
| Storage | 4 TB NVMe |
| USB | 4x USB 3.2 (20 Gbps) |
| Ethernet | 1 RJ-45 (10 GbE) |
| NIC | ConnectX-7 200 Gbps |
| WiFi | WiFi 7 |
| Bluetooth | Bluetooth 5.4 |
| Audio Out | HDMI Multi-channel |
| Peak Power | Adapter power: 240 W |
| Display | 1x HDMI 2.1a |
| NVENC / NVDEC | 1x / 1x |
| OS | Nvidia DGX OS |
| Dimensions | 150 mm x 150 mm x 50.5 mm |
| Weight | 1.2 kg |
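The memory bandwidth figure follows directly from the bus width and transfer rate in the table. A quick check, using a helper name of our own choosing:

```python
def peak_bandwidth_gbps(bus_width_bits: int, transfer_rate_mts: float) -> float:
    """Theoretical peak memory bandwidth in GB/s (10^9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8          # a 256-bit bus moves 32 bytes per transfer
    return bytes_per_transfer * transfer_rate_mts * 1e6 / 1e9


# 256-bit LPDDR5x at 8533 MT/s, as listed in the spec table
print(f"{peak_bandwidth_gbps(256, 8533):.1f} GB/s")
```

That works out to a hair over 273 GB/s, in line with the quoted 273 GBps. This is a theoretical peak, and real workloads will see somewhat less.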

Initial setup

Out of the box, the Spark can be used in one of two modes: a standalone system with a keyboard, mouse, and monitor, or as a headless companion system accessible over the network from a notebook or desktop.

For most of our testing, we opted to use the Spark as a standalone system, as we expect this is how many will choose to interact with the machine.

Setup was straightforward. After connecting to Wi-Fi, creating our user account and setting things like the time zone and keyboard layout, we were greeted with a lightly customized version of Ubuntu 24.04 LTS.

If you were hoping for Windows, you won’t find it here. On the other hand, none of the AI features and capabilities of the system are tied to Copilot or its integrated spyware Recall. That also means that you probably won’t be doing much gaming on the thing until Steam decides to release an Arm64 client for Linux.

But, can it run Crysis?

Can it run Crysis? Probably, but we couldn’t get it to work. Box86, a popular way of getting Steam running on Arm systems like the Raspberry Pi 5, refused to work for us on account of the Arm cores not actually supporting 32-bit instructions.

Most of the customizations Nvidia has made to the operating system are under the hood. They include things like drivers, utilities, container plug-ins, Docker, and the all-important CUDA toolkit.

Managing these is a headache on the best of days, so it’s nice to see that Nvidia took the time to customize the OS to cut down on initial setup time.

With that said, the hardware still has a few rough edges. Many apps haven’t been optimized for the GB10’s unified memory architecture. In our testing, this led to more than a few awkward situations where the GPU robbed enough memory from the system to crash Firefox, or worse, lock up the system.

We had Firefox crash on us on more than a few occasions as the GPU starved the operating system of memory

Lowering the barrier to entry, a bit

The Spark is aimed at a variety of machine learning, generative AI, and data science workloads. And while these aren’t nearly as esoteric as they used to be, they can still be daunting for newcomers to wrap their heads around.

A big selling point of the DGX Spark is the software ecosystem behind it. Nvidia has gone out of its way to provide documentation, tutorials, and demos to help users get their feet wet.

Alongside the hardware, Nvidia has put together a catalog of beginner-friendly playbooks on everything from inference to fine tuning

These guides take the form of short, easy-to-follow playbooks, covering topics ranging from AI code assistants and chatbots to GPU-accelerated data science and video search and summarization.

This is tremendously valuable and makes the Spark and GB10 systems feel a lot less like a generic mini PC and more like a Raspberry Pi for the AI era.

Performance

Whether or not Nvidia’s GB10 systems can deliver a level of performance and utility necessary to justify their $3,000+ price tag is another matter entirely. To find out, we ran the Spark through a broad spectrum of fine tuning, image generation, and LLM inference workloads.

After days of benchmarks and demos, the best way we can describe the Spark is as the AI equivalent of a pickup truck. There are certainly faster or higher capacity options available, but, for most of the AI work you might want to do, it’ll get the job done.

Fine tuning

The Spark’s memory capacity is particularly attractive for fine tuning, a process that involves teaching models new skills by exposing them to new information.

A full fine-tune on even a modest LLM like Mistral 7B can require upwards of 100 GB of memory. As a result, most folks looking to customize open models have to rely on techniques like LoRA or QLoRA in order to get the workloads to run on consumer cards. Even then, they’re usually limited to fairly small models.

With Nvidia’s GB10, a full fine tune on a model like Mistral 7B is well within reason, while LoRA and QLoRA make fine tuning on models like Llama 3.3 70B possible.
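To see why a full fine tune is so much hungrier than inference, here is a rough estimate using a common rule of thumb for mixed-precision training with the Adam optimizer. The per-parameter byte counts below are that rule of thumb, an assumption on our part rather than anything measured on the Spark, and they leave out activations, which grow with batch size and sequence length.

```python
# Assumed bytes held per parameter during mixed-precision Adam training
BYTES_PER_PARAM = {
    "bf16 weights": 2,
    "bf16 gradients": 2,
    "fp32 master weights": 4,
    "fp32 Adam momentum": 4,
    "fp32 Adam variance": 4,
}


def full_finetune_gb(params_billion: float) -> float:
    """Approximate training-state memory in GB, excluding activations."""
    return params_billion * sum(BYTES_PER_PARAM.values())


print(f"7B model: about {full_finetune_gb(7):.0f} GB before activations")
```

At 16 bytes per parameter, a nominal 7 billion parameter model lands at roughly 112 GB, which squares with the "upwards of 100 GB" figure. LoRA and QLoRA sidestep most of this by freezing the base weights and training only small adapter matrices.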

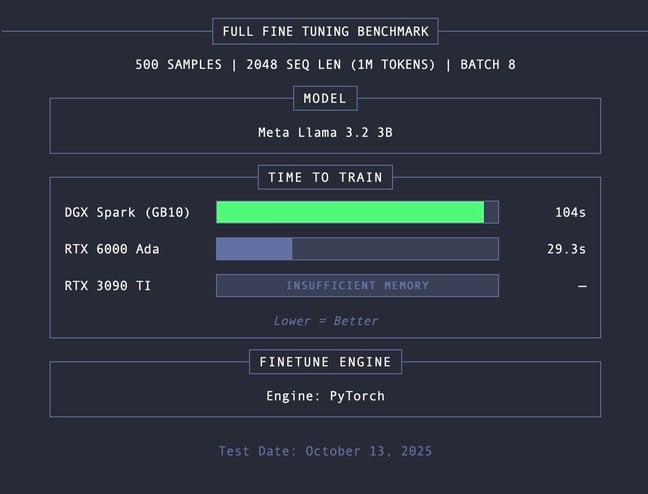

Given the limited time available for testing, we opted to fine tune Meta’s 3 billion parameter Llama 3.2 model on a million tokens worth of training data.

As you can see, with 125 teraFLOPS of dense BF16 performance, the Spark was able to complete the job in just over a minute and a half.

For comparison, our 48 GB RTX 6000 Ada, a card that just a year ago was selling at roughly twice the price of a GB10 system, managed to complete the benchmark in just under 30 seconds.

This isn’t too surprising. The RTX 6000 Ada offers nearly 3x the dense BF16 performance. However, it’s already pushing the limits of model size and sequence length. Use a bigger model or increase the size of each training sample, and the card’s 48 GB of capacity will become a bottleneck long before the Spark starts to struggle.

We also attempted to run the benchmark on an RTX 3090 Ti, which boasts a peak performance of 160 teraFLOPS of dense BF16. In theory, the card should have completed the test in a little over a minute. Unfortunately, with just 24 GB of GDDR6X, it never got the chance, as it quickly triggered a CUDA out of memory error.
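That "little over a minute" estimate is simple linear scaling by peak throughput. A sketch, assuming the Spark's "just over a minute and a half" means about 95 seconds (our reading, not a number from the chart) and that runtime scales inversely with dense BF16 teraFLOPS, which ignores memory bandwidth and software efficiency:

```python
def scaled_runtime_s(ref_seconds: float, ref_tflops: float, target_tflops: float) -> float:
    """Estimate runtime on another GPU, assuming the job is purely compute bound."""
    return ref_seconds * ref_tflops / target_tflops


SPARK_SECONDS = 95   # assumed reading of "just over a minute and a half"
SPARK_TFLOPS = 125   # dense BF16, per the article
RTX_3090_TI_TFLOPS = 160

estimate = scaled_runtime_s(SPARK_SECONDS, SPARK_TFLOPS, RTX_3090_TI_TFLOPS)
print(f"RTX 3090 Ti estimate: about {estimate:.0f} seconds")
```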

If you want to learn more about LLM fine tuning, we have a six-page deep dive on the subject that’ll get you up and running regardless of whether you’ve got AMD or Nvidia hardware.

Image generation

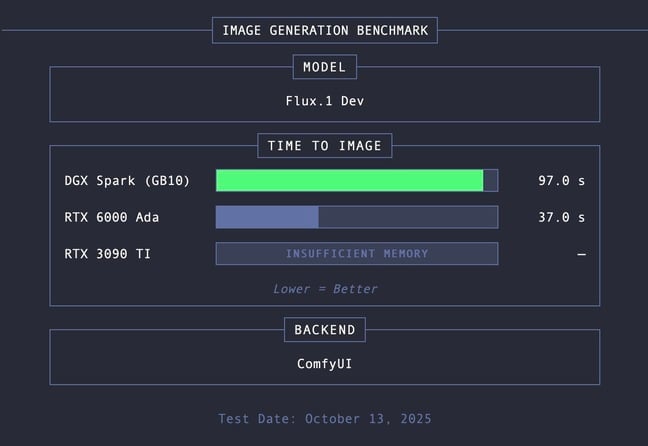

Image generation is another memory-hungry workload. Unlike LLMs, which can be compressed to lower precisions, like INT4 or FP4, with negligible quality loss, the same can’t be said of diffusion models.

The loss in quality from quantization is more noticeable for this class of models, and so the ability to run them at their native FP32 or BF16 precision is a big plus.

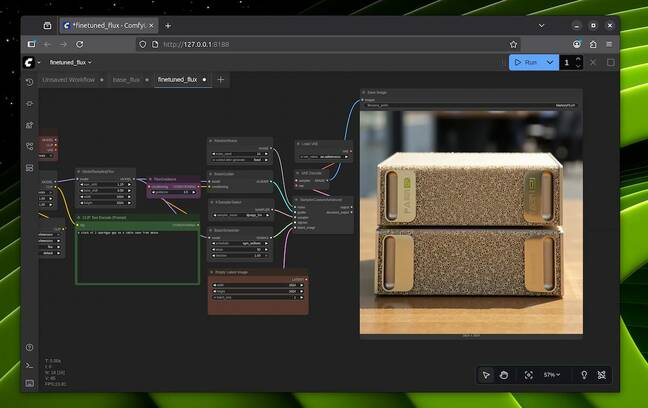

We tested the DGX Spark by spinning up Black Forest Labs’ FLUX.1 Dev at BF16 in the popular ComfyUI web GUI. At this precision, the 12 billion parameter model requires a minimum of 24 GB of VRAM to run on the GPU. That meant the RTX 3090 Ti was out once again.

Technically, you can offload some of the model to system memory, but doing so can cripple performance, particularly at higher resolutions or batch sizes. Since we’re interested in hardware performance, we opted to disable CPU offloading.

With ComfyUI set to 50 generation steps, the DGX Spark again wasn’t a clear winner, requiring about 97 seconds to produce an image, while the RTX 6000 Ada did it in 37.

But, with 128 GB of VRAM, the Spark can do more than simply run the model. Nvidia’s documentation provides instructions on fine tuning diffusion models like FLUX.1 Dev using your own images.

The process took about four hours to complete and a little over 90 GB of memory, but, in the end, we were left with a fine tune of the model capable of generating passable images of the DGX Spark, toy Jensen bobble heads, or any combination of the two.

The results aren’t perfect, but after about four hours of fine tuning we were able to train Black Forest Labs’ FLUX.1 Dev model and show it what a DGX Spark is

LLM Inference

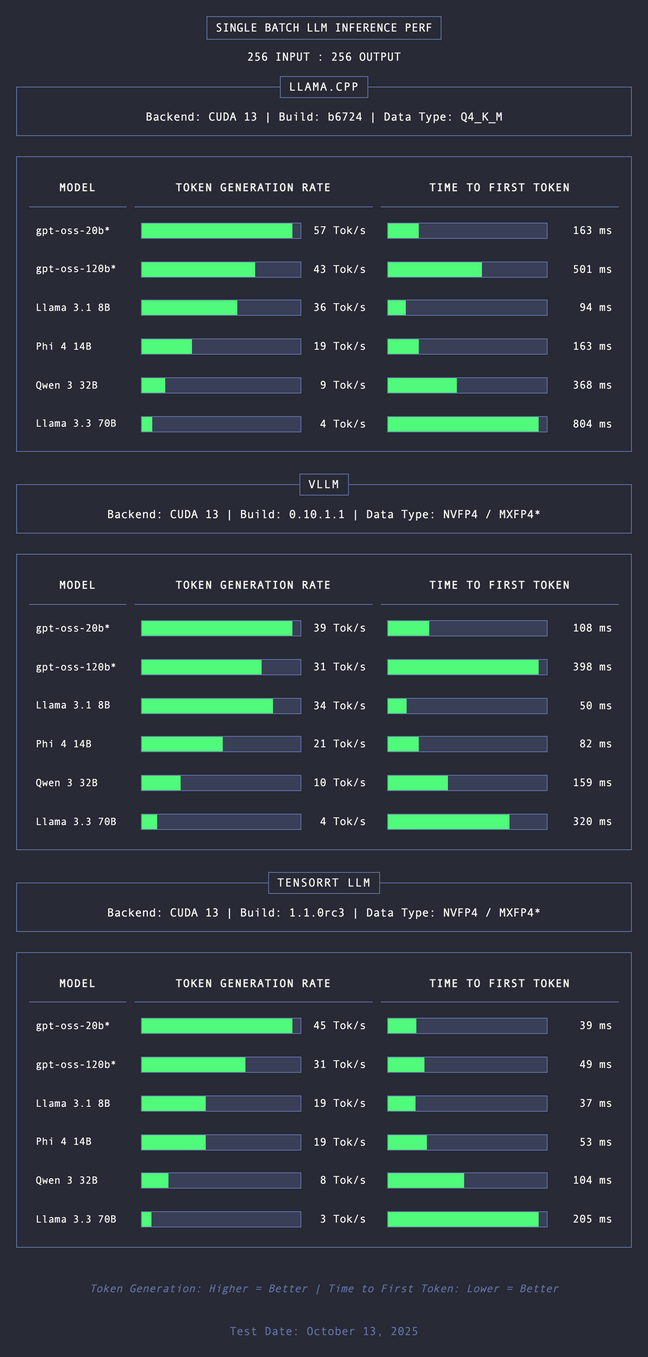

For our LLM inference tests, we used three of the most popular model runners for Nvidia hardware: Llama.cpp, vLLM, and TensorRT LLM.

All of our inference tests were run using 4-bit quantization, a process that compresses model weights to roughly a quarter of their original size, while quadrupling their throughput in the process. For Llama.cpp, we used the Q4_K_M quant. For vLLM and TensorRT LLM, we opted for NVFP4 or MXFP4 in the case of gpt-oss.
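To illustrate where the "roughly a quarter" comes from, here is a deliberately simplified block-wise 4-bit quantizer. It is not Q4_K_M, NVFP4, or MXFP4, all of which use more elaborate scaling schemes. The block size of 32 and the single 16-bit scale per block are our assumptions, chosen for illustration.

```python
import struct

BLOCK = 32  # weights per block (hypothetical choice for this sketch)


def quantize_block(weights):
    """Symmetric absmax quantization of one block to signed 4-bit integers."""
    scale = max(abs(w) for w in weights) / 7
    # Round the scale to fp16 first so quantize and dequantize agree on it.
    # (Weights are assumed small enough that the scale fits in fp16.)
    scale = struct.unpack("<e", struct.pack("<e", scale))[0] or 1.0
    q = [max(-8, min(7, round(w / scale))) for w in weights]
    # Pack two 4-bit values into each byte, low nibble first.
    packed = bytes((q[i] & 0xF) | ((q[i + 1] & 0xF) << 4) for i in range(0, len(q), 2))
    return struct.pack("<e", scale) + packed


def dequantize_block(blob):
    """Recover approximate weights from a packed block."""
    (scale,) = struct.unpack("<e", blob[:2])
    out = []
    for byte in blob[2:]:
        for nibble in (byte & 0xF, byte >> 4):
            signed = nibble - 16 if nibble >= 8 else nibble  # undo two's complement
            out.append(signed * scale)
    return out


weights = [i / 10 - 1.6 for i in range(BLOCK)]
blob = quantize_block(weights)
ratio = len(blob) / (2 * BLOCK)  # compared with 2 bytes per weight at 16-bit
print(f"{len(blob)} bytes instead of {2 * BLOCK}, or {ratio:.0%} of the original")
```

The per-block scale is why the result lands slightly above 25 percent of the 16-bit size rather than exactly on it.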

Most users running LLMs on the Spark aren’t going to have multiple API requests hitting the system simultaneously, so we started by measuring batch-1 inference performance.

On the left, we measured the token generation rate for each of the models tested. On the right, we recorded the time to first token (TTFT), which measures the prompt processing time.

In our testing, we found that at launch Nvidia’s own TensorRT LLM inference engine delivered the best prefill performance overall

Of the model runners, Llama.cpp achieved the highest token generation performance, matching or even beating vLLM and TensorRT LLM in nearly every scenario.

When it comes to prompt processing, TensorRT achieved performance significantly better than either vLLM or Llama.cpp.

We’ll note that we did see some strange behavior with certain models, some of which can be attributed to software immaturity. vLLM, for instance, launched using weights-only quantization, which meant it couldn’t take advantage of the FP4 acceleration in the GB10’s tensor cores.

We suspect this is why the TTFT in vLLM was so poor compared to TensorRT. As the software support for the GB10 improves, we fully expect this gap to close considerably.

The above tests were completed using a relatively short input and output sequence like you might see in a multi-turn chat. However, this is really more of a best-case scenario. As the conversation continues, the input grows, putting more pressure on the compute-heavy prefill stage and making for a longer wait before the model starts responding.

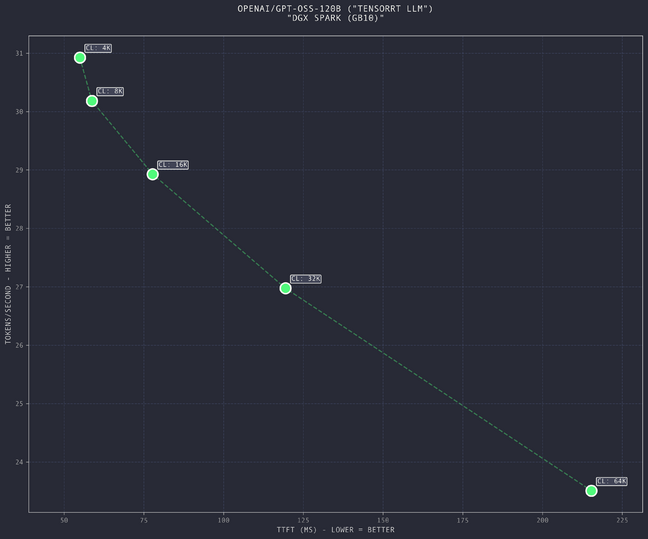

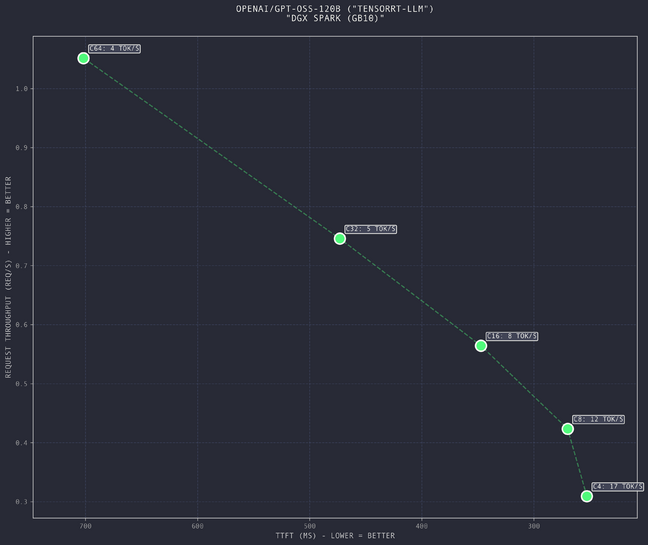

To see how the Spark performed as the context grows, we measured its TTFT (X-axis) and token generation (Y-axis) for gpt-oss-120B at various input sizes ranging from 4096 tokens to 65,536. For this test, we opted to use TensorRT, as it achieved the highest performance in our batch testing.

This graph shows the impact of ever larger input sequences on time to first token and user interactivity (tok/s)

As the input length increases, the generation throughput decreases, and the time to first token climbs, exceeding 200 milliseconds by the time it reaches 65,536 tokens. That’s equivalent to roughly 200 double-spaced pages of text.

This is incredibly impressive for such a small system and showcases the performance advantage of native FP4 acceleration introduced on the Blackwell architecture.

Stacking up the Spark

For models that fit within a discrete GPU’s VRAM, the card’s higher memory bandwidth gives it an edge in token generation performance.

That means a chip with 960 GBps of memory bandwidth is going to be faster than a Spark at generating tokens. But that’s only true so long as the model and context fit in memory.
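The reasoning here is that, at batch size one, generating each token means streaming the model's weights through the GPU once, so memory bandwidth sets a ceiling on tokens per second. A rough sketch under that assumption, which holds for dense models only (mixture-of-experts models read just their active experts) and ignores the key-value cache and other overheads:

```python
def decode_ceiling_tok_s(bandwidth_gbps: float, weights_gb: float) -> float:
    """Upper bound on batch-1 tokens/sec if every token reads all weights once."""
    return bandwidth_gbps / weights_gb


WEIGHTS_GB = 16  # assumed: a 32 billion parameter dense model at 4-bit

for name, bandwidth in [("DGX Spark", 273), ("960 GBps GPU", 960)]:
    ceiling = decode_ceiling_tok_s(bandwidth, WEIGHTS_GB)
    print(f"{name}: at most about {ceiling:.0f} tok/s")
```

Real-world numbers will come in below these ceilings, but the ratio between the two is a reasonable first guess at the gap.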

This becomes abundantly clear as we look at the performance delta between our RTX 6000 Ada, RTX 3090 Ti, and the Spark.

Compared to other high-end GPUs from Nvidia, the DGX Spark may not be as fast, but it can run models others cannot

As models push past 70 billion parameters, memory bandwidth becomes irrelevant on all but the most expensive workstation cards, simply because they don’t have the memory capacity necessary to run them anymore.

And sure, both the 3090 Ti and 6000 Ada can fit medium-size models like Qwen3 32B or Llama 3.3 70B at 4-bit precision, but there’s not much room left over for context. The key-value cache, which keeps track of something like a chat, can consume tens or even hundreds of gigabytes depending on how big the context window is.
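A back-of-the-envelope estimate shows how quickly the cache grows. The default dimensions below (80 layers, 8 key-value heads, a head dimension of 128, and 16-bit cache entries) are our assumptions for a Llama-style 70 billion parameter model with grouped-query attention, not figures from our testing.

```python
def kv_cache_gib(tokens: int, layers: int = 80, kv_heads: int = 8,
                 head_dim: int = 128, bytes_per_value: int = 2) -> float:
    """Approximate key-value cache size in GiB for a single sequence."""
    # Each token stores one key and one value vector per KV head, per layer.
    per_token_bytes = 2 * layers * kv_heads * head_dim * bytes_per_value
    return tokens * per_token_bytes / 2**30


for context in (8_192, 32_768, 131_072):
    print(f"{context:>7} tokens: about {kv_cache_gib(context):.1f} GiB")
```

With those assumed dimensions, a single 131,072-token context works out to about 40 GiB on top of the weights. Serving several long conversations at once multiplies that figure accordingly.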

Multi-batch performance

Another common scenario for LLMs is using them to extract information from large quantities of documents. In this case, rather than processing them sequentially one at a time, it’s often faster to process them in larger batches of four, eight, 16, 32, or more.

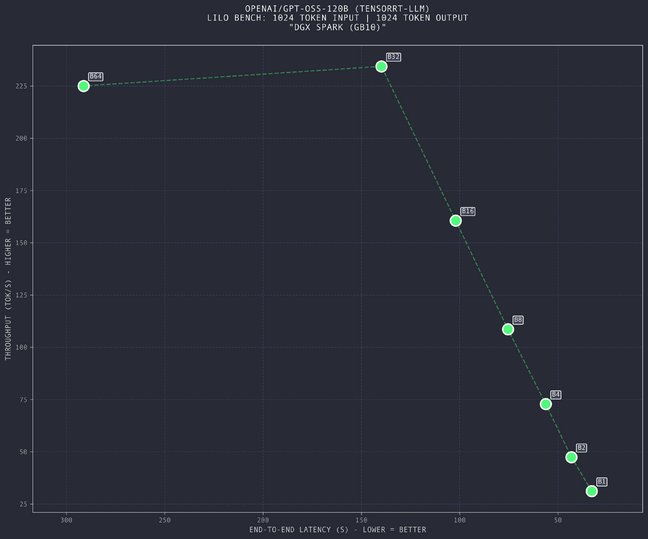

To test the Spark’s performance in a batch processing scenario, we tasked it with using gpt-oss-120B to process a 1,024 token input and generate a 1,024 token response at batch sizes ranging from one to 64.

This graph charts overall throughput (tok/s) against end-to-end latency at various batch sizes ranging from 1-64

On the X-axis, we’ve plotted the time in seconds required to complete the batch job. On the Y-axis, meanwhile, we’ve plotted overall generative throughput at each batch size.

In this case, we see performance plateaus at around batch 32, as it takes longer for each subsequent batch size to complete. This indicates that, at least for gpt-oss-120B, the Spark’s compute or memory resources are becoming saturated around this point.

Online serving

While the Spark is clearly intended for individual use, we can easily see a small team deploying one or more of these as an inference server for processing data or documents locally.

Similar to the multi-batch benchmark, we’re measuring performance metrics like TTFT, request rate, and individual performance at various levels of concurrency.

Similar to the multi-batch benchmark, this graph depicts the performance characteristics for various numbers of concurrent requests

With four concurrent users, the Spark was able to process one request every three seconds while maintaining a relatively interactive experience at 17 tok/s per user.

As you can see, the number of requests the machine can handle increases with concurrency. Up to 64 concurrent requests, the machine was able to maintain an acceptable TTFT of under 700 ms, but that comes at the cost of a plodding user experience as the generation rate plummets to 4 tok/s.

This tells us that, in this particular workload, the Spark has plenty of compute to keep up with a large number of concurrent requests, but is bottlenecked by a lack of memory bandwidth.

With that said, even a request rate of 0.3 per second is a lot more than you might think, working out to 1,080 requests an hour, enough to support a handful of users throughout the day with minimal slowdowns.
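The arithmetic behind those serving figures is easy to check. The helper names are ours, and the inputs are the numbers quoted above.

```python
def requests_per_hour(rate_per_second: float) -> int:
    """Convert a sustained request rate into requests per hour."""
    return round(rate_per_second * 3600)


def aggregate_tok_s(concurrent_users: int, tok_s_per_user: float) -> float:
    """Total generation throughput across all concurrent users."""
    return concurrent_users * tok_s_per_user


print(requests_per_hour(0.3), "requests per hour")
print(aggregate_tok_s(4, 17), "tok/s total at 4 users")
print(aggregate_tok_s(64, 4), "tok/s total at 64 users")
```

Per-user speed drops as concurrency rises, but the machine's total output keeps climbing, from about 68 tok/s across four users to about 256 tok/s across 64.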

The DGX Spark’s real competition

As we alluded to earlier, the DGX Spark’s real competition isn’t consumer or even workstation GPUs. Instead, platforms like Apple’s M4 Mac Mini and Studio or AMD’s Ryzen AI Max+ 395 based systems, which you may recognize by the name Strix Halo, pose the biggest challenge.

These systems feature a similar unified memory architecture and a large quantity of fast DRAM. Unfortunately, we don’t have any of these systems on hand to compare against just yet, so we can only point to speeds and feeds. Even then, we don’t have complete information.

| | Nvidia DGX Spark | Nvidia Jetson Thor | Apple M4 Max | AMD Ryzen AI Max+ 395 |
| --- | --- | --- | --- | --- |
| OS | DGX OS | ? | macOS | Windows / Linux |
| FP/BF16 TFLOPS | 125 | 250 | ? | 59 (est.) |
| FP8 TFLOPS | 250 | 500 | ? | ? |
| FP4 TFLOPS | 500 | 1000 | ? | ? |
| NPU TOPS | N/A | N/A | 38 | 50 |
| Max Mem Cap | 128 GB | 128 GB | 128 GB | 128 GB |
| Mem BW | 273 GBps | 273 GBps | 546 GBps | 256 GBps |
| Runtime | CUDA | CUDA | Metal | ROCm / HIP |
| Price | $3,000-$3,999 | $3,499 | $3,499-$5,899 | $1,999+ |

Putting the DGX Spark in that context, the $3,000-$4,000 price tag for a GB10-based system doesn’t sound quite so crazy. AMD and its partners are seriously undercutting Nvidia on price, but the Spark is, at least on paper, much faster.

A Mac Studio with an equivalent amount of storage on the other hand is a fair bit more expensive but boasts higher memory bandwidth, which is going to translate into better token-generation. What’s more, if you’ve got cash to burn on a local token factory, the machine can be specced with up to 512 GB on the M3 Ultra variant.

The Spark’s biggest competition could however come from within. As it turns out, Nvidia actually makes an even more powerful Blackwell-based mini PC that, depending on your config, may even be cheaper.

Nvidia’s Jetson Thor developer kit is primarily designed as a robotics development platform. With twice the sparse FP4 performance, 128 GB of memory, and 273 GBps of bandwidth, the system offers a better bang for your buck at $3,499 than the DGX Spark.

Thor does have less I/O bandwidth with a single 100 Gbps QSFP slot that can be broken out into four 25 Gbps ports. As cool as the Spark’s integrated ConnectX-7 NICs might be — we haven’t had a chance to test them just yet — we expect many folks considering one would have happily forgone the high-speed networking in favor of a lower MSRP.

Summing up

Whether or not the DGX Spark is right for you is going to depend on a couple of factors.

If you want a small, low-power AI development platform that can pull double duty as a productivity, content creation, or gaming system, then the DGX Spark probably isn’t for you. You’re better off investing in something like AMD’s Strix Halo or a Mac Studio, or waiting a few months until Nvidia’s GB10 Superchip inevitably shows up in a Windows box.

But, if your main focus is on machine learning, and you’re in the market for a relatively affordable AI workstation, there are few options that tick as many boxes as the Spark. ®
