How we sharpened the James Webb telescope’s vision from a million kilometres away

After Christmas dinner in 2021, our family was glued to the television, watching the nail-biting launch of NASA’s US$10 billion (AU$15 billion) James Webb Space Telescope. There had not been such a leap forward in telescope technology since Hubble was launched in 1990.

En route to its deployment, Webb had to successfully navigate 344 potential points of failure. Thankfully, the launch went better than expected, and we could finally breathe again.

Six months later, Webb’s first images were revealed, showing the most distant galaxies yet seen. For our team in Australia, however, the work was only beginning.

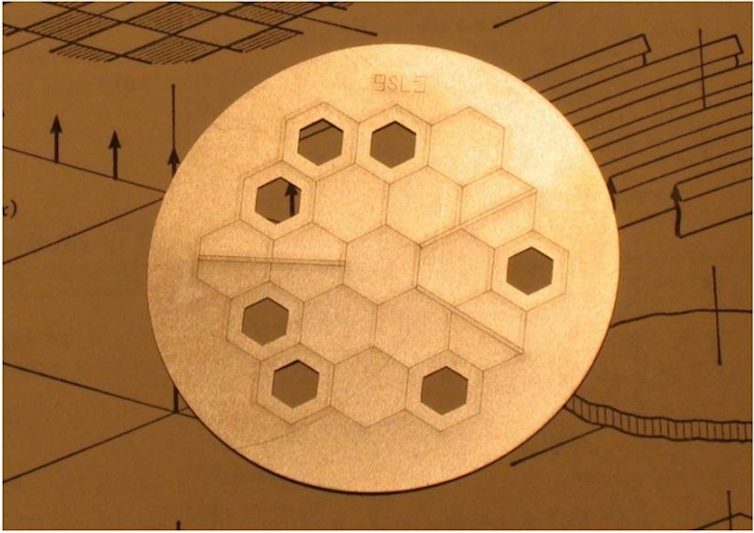

We would be using Webb’s highest-resolution mode, called the aperture masking interferometer or AMI for short. It’s a tiny piece of precisely machined metal that slots into one of the telescope’s cameras, enhancing its resolution.

The results of our painstaking work testing and enhancing AMI are now available in a pair of papers on the open-access archive arXiv. We can finally present its first successful observations of stars, planets, moons and even black hole jets.

Working with an instrument a million kilometres away

Hubble started its life seeing out of focus – its mirror had been ground precisely, but incorrectly. By looking at known stars and comparing the ideal and measured images (much as an optometrist does), it was possible to work out a “prescription” for this optical error and design corrective optics to compensate.

Hubble orbits Earth just a few hundred kilometres above the surface, within reach of astronauts: in 1993, seven of them flew up on the Space Shuttle Endeavour to install the new optics.

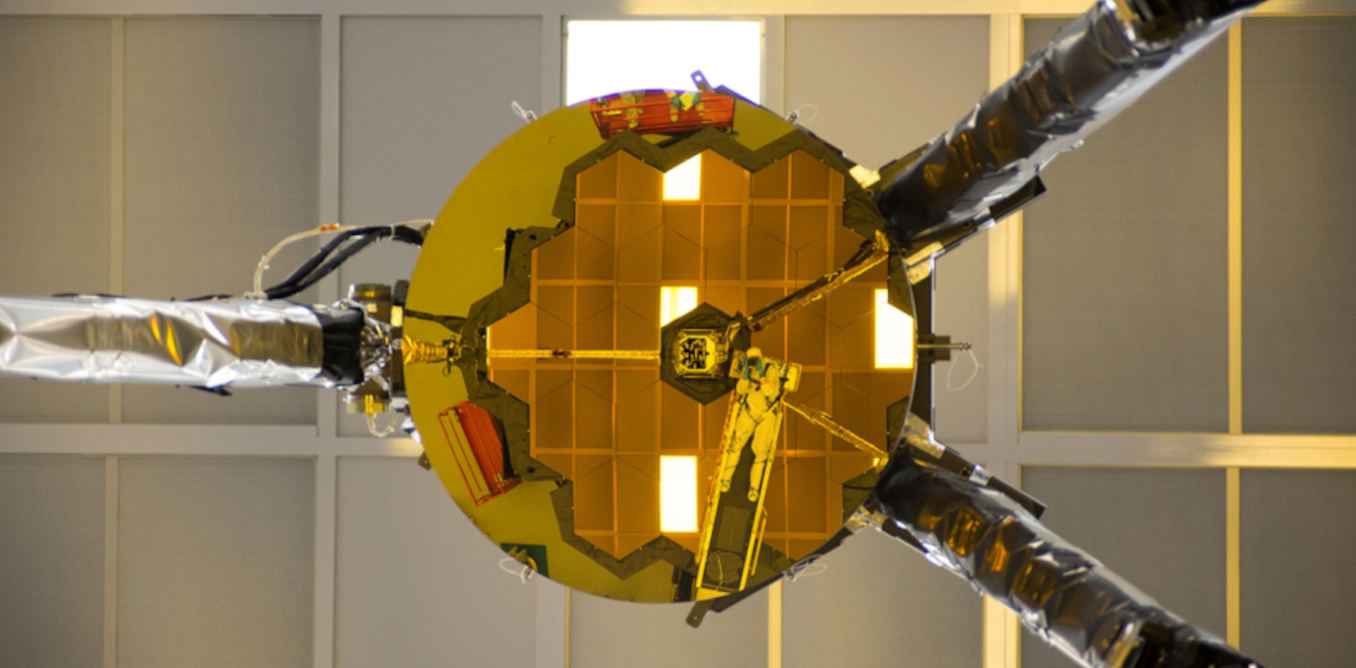

NASA/Chris Gunn

By contrast, Webb is roughly 1.5 million kilometres away. We can’t visit and service it, so any issues have to be fixed without changing the hardware.

This is where AMI comes in. It is the only Australian hardware on board, and was designed by astronomer Peter Tuthill.

It was put on Webb to diagnose and measure any blur in its images. Even nanometres of distortion in the 18 hexagonal segments of Webb’s primary mirror, or in its many internal surfaces, will blur the images enough to hinder the study of planets or black holes, where sensitivity and resolution are key.
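Why do mere nanometres matter? A standard optics rule of thumb, the Maréchal approximation (brought in here for illustration, not taken from the papers), says that a small root-mean-square wavefront error σ at wavelength λ scatters roughly (2πσ/λ)² of the starlight out of the sharp core of the image. The minimal sketch below assumes an illustrative wavelength of 4.8 micrometres; the error values are hypothetical.

```python
import math


def scattered_fraction(rms_error_nm: float, wavelength_nm: float) -> float:
    """Approximate fraction of starlight scattered out of the image core.

    Marechal approximation: Strehl ratio S ~ exp(-(2*pi*sigma/lambda)**2),
    so the scattered fraction 1 - S is about (2*pi*sigma/lambda)**2 for small errors.
    """
    phase_rms = 2 * math.pi * rms_error_nm / wavelength_nm  # RMS phase error, radians
    strehl = math.exp(-phase_rms ** 2)
    return 1 - strehl


# Assumed illustrative wavelength: 4.8 micrometres = 4800 nm.
for sigma_nm in (1, 10, 50):
    frac = scattered_fraction(sigma_nm, 4800)
    print(f"{sigma_nm:>3} nm RMS error -> {frac:.1e} of the light scattered")
```

With these assumed numbers, a 10-nanometre error scatters nearly 2 parts in 10,000 of the starlight into a faint halo, the same order as the total brightness of a companion several thousand times fainter than its star.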

AMI filters the light with a carefully structured pattern of holes in a simple metal plate, to make it much easier to tell if there are any optical misalignments.
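Why holes? In aperture masking, each pair of holes acts like a small two-element interferometer, producing fringes at a spatial frequency set by the vector separating the two holes (its “baseline”). Masking designs are typically non-redundant: no two pairs share a baseline, so every fringe traces back to exactly one pair of holes and a distortion shows up cleanly. The sketch below checks that property; the hole coordinates are made up for illustration and are not AMI’s real layout.

```python
from itertools import combinations


def baselines(holes):
    """Return the baseline vector for every pair of holes.

    A baseline b and its negative -b produce the same fringe, so each vector
    is folded to a canonical sign before comparison.
    """
    result = []
    for (x1, y1), (x2, y2) in combinations(holes, 2):
        dx, dy = x2 - x1, y2 - y1
        if dx < 0 or (dx == 0 and dy < 0):  # fold b and -b together
            dx, dy = -dx, -dy
        result.append((dx, dy))
    return result


def is_non_redundant(holes):
    """True if no two pairs of holes share the same baseline."""
    b = baselines(holes)
    return len(b) == len(set(b))


# Hypothetical hole positions (arbitrary units).
mask = [(0, 0), (1, 0), (0, 2), (3, 1)]
square = [(0, 0), (1, 0), (0, 1), (1, 1)]  # a square repeats its side baselines
print(is_non_redundant(mask), is_non_redundant(square))
```

With n holes there are n(n − 1)/2 baselines, so even a handful of holes samples many spatial frequencies at once.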

Anand Sivaramakrishnan/STScI

Hunting blurry pixels

We wanted to use this mode to observe the birthplaces of planets, as well as material being sucked into black holes. But before any of this, AMI showed Webb wasn’t working entirely as hoped.

At very fine resolution – at the level of individual pixels – all the images were slightly blurry due to an electronic effect: brighter pixels leaking into their darker neighbours.

This is not a mistake or flaw, but a fundamental feature of infrared cameras that turned out to be unexpectedly serious for Webb.
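A toy illustration of this kind of effect, with entirely made-up numbers: each pixel of a one-dimensional image passes a fraction of its signal to its two neighbours, and that fraction grows with the pixel’s own brightness. The linear relation and the `base` and `per_count` values are assumptions for illustration, not a model of Webb’s real detector.

```python
def leak(pixels, base=0.01, per_count=1e-5):
    """Toy 1D model of charge leaking from each pixel into its two neighbours.

    Hypothetical assumption: the fraction a pixel loses grows linearly with
    its own signal, so bright pixels leak proportionally more than faint ones.
    Total signal is conserved.
    """
    out = [0.0] * len(pixels)
    for i, value in enumerate(pixels):
        frac = min(base + per_count * value, 0.5)  # cap the leak fraction
        lost = value * frac
        out[i] += value - lost
        # Split the lost charge between the neighbours; at an edge it stays put.
        for j in (i - 1, i + 1):
            if 0 <= j < len(pixels):
                out[j] += lost / 2
            else:
                out[i] += lost / 2
    return out


bright = leak([0, 10, 1000, 10, 0])
faint = leak([0, 1, 100, 1, 0])
# The bright star's peak keeps a smaller share of its light than the faint one's,
# so the blur depends on brightness and no single fixed blur describes it.
print(bright[2] / 1000, faint[2] / 100)
```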

This was a dealbreaker for seeing distant planets many thousands of times fainter than their stars and only a few pixels away: my colleagues quickly showed that AMI’s detection limits were more than ten times worse than hoped.
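Astronomers usually quote such contrasts in magnitudes, using Δm = 2.5 log₁₀(F_star / F_companion). A short sketch of what “ten times worse” means in those units; the 10,000-fold target contrast is a hypothetical figure, not one from the papers.

```python
import math


def contrast_to_magnitudes(flux_ratio: float) -> float:
    """Magnitude difference for a star-to-companion brightness ratio."""
    return 2.5 * math.log10(flux_ratio)


hoped_ratio = 10_000              # hypothetical: detect companions 10,000 times fainter
actual_ratio = hoped_ratio / 10   # ten times worse: only 1,000 times fainter
lost_depth = contrast_to_magnitudes(hoped_ratio) - contrast_to_magnitudes(actual_ratio)
print(f"Depth lost: {lost_depth:.1f} magnitudes")
```

Whatever the starting contrast, every factor of ten lost costs 2.5 magnitudes of depth.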

So, we set out to correct it.

How we sharpened Webb’s vision

In a new paper led by University of Sydney PhD student Louis Desdoigts, we looked at stars with AMI to learn and correct the optical and electronic distortions simultaneously.

We built a computer model to simulate AMI’s optical physics, leaving the shapes of the mirrors and apertures, and the colours of the stars, free to vary.
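A minimal sketch of this kind of optical simulation, assuming simple far-field (Fraunhofer) optics: the image of a star is the squared magnitude of the Fourier transform of the light passing through the mask, an optical path difference map stands in for mirror and aperture distortions, and images at several wavelengths are summed with weights standing in for the star’s colour. The hole layout, band, weights and distortion are all hypothetical; the team’s real model is far more detailed.

```python
import numpy as np


def hole_pupil(n, holes, radius, half_width=1.0):
    """Binary pupil: 1 inside each circular hole, 0 elsewhere, on an n x n grid."""
    coords = np.linspace(-half_width, half_width, n)
    x, y = np.meshgrid(coords, coords)  # x varies along columns, y along rows
    pupil = np.zeros((n, n))
    for hx, hy in holes:
        pupil[(x - hx) ** 2 + (y - hy) ** 2 <= radius ** 2] = 1.0
    return coords, pupil


def mono_psf(coords, pupil, opd, wavelength, angles):
    """Monochromatic far-field image via a matrix Fourier transform.

    opd: optical path difference map (metres) standing in for distortions.
    angles: sky angles (radians) at which to evaluate the image, on both axes.
    """
    field = pupil * np.exp(2j * np.pi * opd / wavelength)
    kernel = np.exp(-2j * np.pi * np.outer(angles, coords) / wavelength)
    amplitude = kernel @ field @ kernel.T  # rows: y angle, columns: x angle
    return np.abs(amplitude) ** 2


def poly_psf(coords, pupil, opd, wavelengths, weights, angles):
    """Weight monochromatic images by the assumed spectrum; normalise to unit flux."""
    image = sum(w * mono_psf(coords, pupil, opd, lam, angles)
                for lam, w in zip(wavelengths, weights))
    return image / image.sum()


# Hypothetical mask: four 8 cm holes (positions in metres, arbitrary layout).
holes = [(0.0, 0.0), (0.3, 0.0), (0.0, 0.6), (0.9, 0.3)]
coords, pupil = hole_pupil(128, holes, radius=0.08)
angles = np.linspace(-3e-5, 3e-5, 64)    # radians on the sky
wavelengths = [4.6e-6, 4.8e-6, 5.0e-6]   # assumed band, metres
weights = [0.8, 1.0, 0.9]                # assumed stellar spectrum

ideal = poly_psf(coords, pupil, np.zeros_like(pupil), wavelengths, weights, angles)

# Hypothetical distortion: a 200 nm piston error on one hole only.
_, one_hole = hole_pupil(128, [(0.9, 0.3)], radius=0.08)
aberrated = poly_psf(coords, pupil, 200e-9 * one_hole, wavelengths, weights, angles)
```

A matrix Fourier transform is used instead of an FFT so that every wavelength is sampled on the same grid of sky angles, which keeps the polychromatic sum straightforward.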

We connected this to a machine learning model that represents the electronics as an “effective detector model”: we only care how well it reproduces the data, not why.
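The papers use a machine learning model here. As a much simpler stand-in (a substitution for illustration, not the authors’ method), the sketch below learns a small “effective” 3×3 kernel mapping ideal images to measured ones by linear least squares. Like the team’s model, it makes no attempt to explain the physics, only to reproduce the data. The kernel values and calibration images are hypothetical.

```python
import numpy as np


def patches(image):
    """Stack every pixel's 3x3 neighbourhood (zero-padded) into rows of a matrix."""
    padded = np.pad(image, 1)
    h, w = image.shape
    return np.stack([padded[i:i + h, j:j + w].ravel()
                     for i in range(3) for j in range(3)], axis=1)


def fit_kernel(ideal_images, measured_images):
    """Least-squares 3x3 kernel that best maps ideal images to measured ones."""
    A = np.vstack([patches(img) for img in ideal_images])
    b = np.concatenate([img.ravel() for img in measured_images])
    kernel, *_ = np.linalg.lstsq(A, b, rcond=None)
    return kernel.reshape(3, 3)


def apply_kernel(image, kernel):
    """Forward detector model: blur an ideal image with the learned kernel."""
    return (patches(image) @ kernel.ravel()).reshape(image.shape)


rng = np.random.default_rng(0)
true_kernel = np.array([[0.00, 0.02, 0.00],
                        [0.02, 0.92, 0.02],
                        [0.00, 0.02, 0.00]])  # hypothetical leakage pattern
calib = [rng.random((16, 16)) for _ in range(5)]  # stand-ins for test-star images
measured = [apply_kernel(img, true_kernel) + 1e-4 * rng.standard_normal(img.shape)
            for img in calib]
learned = fit_kernel(calib, measured)
```

A fixed linear kernel cannot capture leakage that depends on how bright a pixel is, which is one reason a more flexible learned model is attractive for the real detector.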

After training and validation on some test stars, this setup allowed us to calculate and undo the blur in other data, restoring AMI to full function. It doesn’t change what Webb does in space, but rather corrects the data during processing.
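One common way to use a calibrated forward model, sketched here as an assumption rather than the papers’ exact pipeline, is not to invert the blur directly but to push candidate sources through the model and fit their brightnesses to the data. In this sketch the detector kernel, source positions and fluxes are all hypothetical.

```python
import numpy as np


def blur(image, kernel):
    """Hypothetical calibrated detector model: 3x3 convolution, zero-padded edges."""
    padded = np.pad(image, 1)
    h, w = image.shape
    out = np.zeros(image.shape)
    for i in range(3):
        for j in range(3):
            out += kernel[i, j] * padded[i:i + h, j:j + w]
    return out


def point(shape, row, col):
    """Ideal image of a unit-flux point source at one pixel."""
    img = np.zeros(shape)
    img[row, col] = 1.0
    return img


def fit_fluxes(data, kernel, positions):
    """Fit each point source's flux by forward-modelling it through the detector."""
    templates = [blur(point(data.shape, r, c), kernel).ravel() for r, c in positions]
    A = np.stack(templates, axis=1)
    fluxes, *_ = np.linalg.lstsq(A, data.ravel(), rcond=None)
    return fluxes


kernel = np.array([[0.00, 0.02, 0.00],
                   [0.02, 0.92, 0.02],
                   [0.00, 0.02, 0.00]])  # hypothetical calibrated kernel
positions = [(8, 8), (8, 10)]  # star and a companion two pixels away (assumed known)
truth = 1000 * point((17, 17), 8, 8) + 1 * point((17, 17), 8, 10)
rng = np.random.default_rng(1)
data = blur(truth, kernel) + 1e-3 * rng.standard_normal((17, 17))
star_flux, companion_flux = fit_fluxes(data, kernel, positions)
```

In this toy setup, the star’s leaked light in the pixel between the two sources is about twenty times the companion’s own signal, which is why the leakage has to be modelled before a 1,000-fold fainter companion can be measured.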

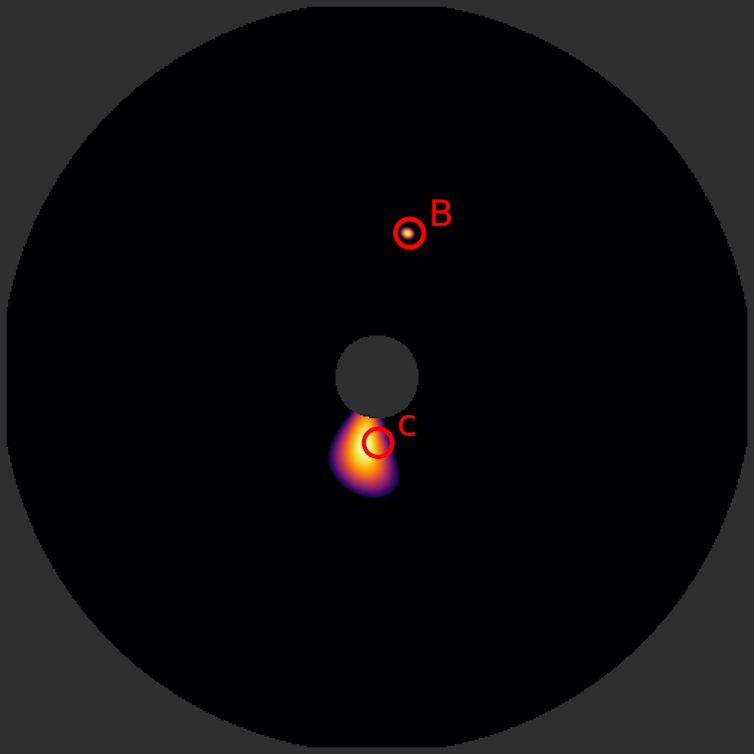

It worked beautifully. The star HD 206893 hosts a faint planet and the reddest-known brown dwarf (an object between a star and a planet). Both were already known, but were out of reach for Webb before this correction. Now, both little dots popped out clearly in our new maps of the system.

Desdoigts et al., 2025

This correction has opened the door to using AMI to prospect for unknown planets at previously impossible resolutions and sensitivities.

It works on more than just dots

In a companion paper led by University of Sydney PhD student Max Charles, we went beyond dots (even when those dots are planets) to form complex images at the highest resolution achieved with Webb. We revisited well-studied targets that push the limits of the telescope, testing its performance.

Max Charles

With the new correction, we brought Jupiter’s moon Io into focus, clearly tracking its volcanoes as it rotates over an hour-long timelapse.

As seen by AMI, the jet launched from the black hole at the centre of the galaxy NGC 1068 closely matched images from much larger telescopes.

Finally, AMI can sharply resolve a ribbon of dust around a pair of stars called WR 137, a faint cousin of the spectacular Apep system, in line with theoretical predictions.

The code built for AMI is a demonstration for much more complex cameras on Webb and its follow-up, the Roman Space Telescope. These instruments demand an optical calibration so fine that it amounts to just a fraction of a nanometre, beyond the capacity of any known material.

Our work shows that if we can measure, control, and correct the materials we do have to work with, we can still hope to find Earth-like planets in the far reaches of our galaxy.
