NVIDIA’s AI Servers Have Seen a Whopping 100x Rise in Power Consumption Over the Years — Can the World Meet AI’s Growing Energy Needs?

NVIDIA’s AI servers have experienced a significant increase in power requirements, raising questions about the sustainability of this growth.

NVIDIA’s Jump From Ampere To Kyber Will Bring Up To 100x Increase In Power Consumption, In Just Eight Years

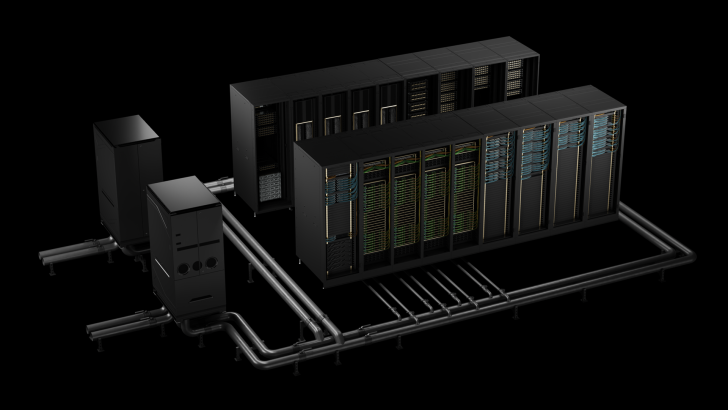

The AI industry is rushing to scale up computing capabilities, either through hardware-level advancements or by building out large-scale AI clusters. As firms like OpenAI and Meta pour their resources into reaching milestones like artificial general intelligence (AGI), manufacturers like NVIDIA have become significantly more aggressive with their product offerings. An illustration shared by analyst Ray Wang shows that the power requirements of NVIDIA's AI server platforms rise significantly with each generation, and that the transition from Ampere to Kyber brings up to a 100-fold increase in power consumption.

The jump in power ratings across NVIDIA's rack generations has several causes, but the most important are the growing number of GPUs per rack and the generational rise in each GPU's TDP. For example, Hopper systems ran at about 10 kW per chassis, but with Blackwell, largely because of the higher GPU count, this rose to almost 120 kW. Team Green is intent on meeting the industry's computing needs, which is why the energy consumption of its rack-scale solutions is growing at an alarming rate.
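To put the figures above in perspective, the short Python sketch below uses only the numbers quoted in this article: roughly 10 kW for a Hopper chassis, roughly 120 kW for a Blackwell rack, and an increase of up to 100x over eight years. The helper names `growth_factor` and `annual_rate` are hypothetical, and the per-year figure assumes smooth compound growth, which is a simplification since power actually rises in steps with each new platform.

```python
def growth_factor(old_kw: float, new_kw: float) -> float:
    """Ratio between two power ratings (new / old)."""
    return new_kw / old_kw


def annual_rate(total_factor: float, years: float) -> float:
    """Per-year multiplier that compounds to total_factor over `years`.

    Assumes smooth compound growth (a simplification: real platforms
    arrive in discrete generational steps).
    """
    return total_factor ** (1.0 / years)


# Figures quoted in the article: ~10 kW (Hopper) vs. ~120 kW (Blackwell).
hopper_to_blackwell = growth_factor(10.0, 120.0)

# Headline claim: up to 100x from Ampere to Kyber in eight years.
per_year = annual_rate(100.0, 8.0)

print(f"Hopper -> Blackwell: {hopper_to_blackwell:.0f}x")
print(f"100x over 8 years is about {per_year:.2f}x per year")
```

Under these assumptions, Hopper to Blackwell alone is a 12x jump, and a 100x rise over eight years works out to roughly 1.78x (about 78% more power) every year.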

Other factors, such as advanced NVLink/NVSwitch fabrics, newer rack generations, and sustained high rack utilization, have driven hyperscalers' energy consumption up at an unprecedented rate. Big Tech is now racing to see who can build the larger AI rack-scale campus, and the metric used to measure it has become gigawatts (GW), with firms like OpenAI and Meta aiming to add more than 10 GW of computing capacity in the coming years.

For quick reference, estimates suggest that 1 GW of power draw from AI hyperscalers could power approximately 1 million US homes, excluding factors such as cooling and power delivery requirements. Scale that up to the mega-campuses being built by Big Tech, and AI energy consumption is growing so quickly that individual facilities now consume as much power as a mid-size country or several large US states. This is a serious concern, and one the Trump administration has repeatedly raised.
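The "1 GW for about 1 million homes" rule of thumb can be unpacked with a little arithmetic. The Python sketch below derives the average household draw implied by that estimate and applies it to the 10 GW targets mentioned earlier. The function names are hypothetical, and the sketch assumes the campus draws its full rated power around the clock while, like the estimate itself, ignoring cooling and power delivery overhead.

```python
WATTS_PER_GW = 1e9
HOURS_PER_YEAR = 8760  # 365 days x 24 hours


def implied_draw_per_home_kw(gw: float, homes: float) -> float:
    """Average continuous draw per home (kW) implied by 'gw powers homes'."""
    return gw * WATTS_PER_GW / homes / 1000.0


def homes_powered(gw: float, kw_per_home: float) -> float:
    """Number of homes a given capacity could supply at kw_per_home each."""
    return gw * WATTS_PER_GW / (kw_per_home * 1000.0)


# Rule of thumb from the article: 1 GW ~ 1 million US homes.
kw_per_home = implied_draw_per_home_kw(1.0, 1_000_000)

# Assumes constant draw all year (no cooling or delivery losses counted).
kwh_per_year = kw_per_home * HOURS_PER_YEAR

# The 10 GW build-out targets mentioned for OpenAI and Meta.
homes_10gw = homes_powered(10.0, kw_per_home)

print(f"Implied draw per home: {kw_per_home:.1f} kW ({kwh_per_year:,.0f} kWh/year)")
print(f"10 GW is roughly {homes_10gw:,.0f} homes")
```

In other words, the estimate treats each home as an average continuous load of about 1 kW, so a single 10 GW build-out would be on the order of 10 million homes' worth of demand.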

The IEA's 2025 "Energy and AI" report estimates that, driven largely by AI, data center electricity consumption will roughly double by 2030, growing nearly four times faster than overall electricity demand. More importantly, one consequence of rapidly building data centers around the globe would be higher household electricity costs, especially in regions near these large-scale buildouts. Ultimately, America, along with the other nations in the AI race, faces a grave energy problem.

Follow Wccftech on Google or add us as a preferred source, to get our news coverage and reviews in your feeds.
